Brain Cells Use a Telephone Trick to Report What They See

Single neurons communicate about multiple objects by rapidly switching which one they are reporting on

DURHAM, N.C. -- “How many fingers am I holding up?”

For vision-sensing brain cells in a monkey’s visual cortex, the answer depends on whether the digits are next to each other or partially overlapping.

A new study from Duke University finds that single neurons conveying visual information about two separate objects in sight do so by alternating signals about one or the other. When two objects overlap, however, the brain cells detect them as a single entity.

The new report is out Nov. 28 in the journal eLife.

The findings help expand what is known about how the brain makes sense of its complicated and busy world. Most research on sensory processing, be it sounds or sights, sets the bar too low by testing how brain cells react to a single tone or image.

“There are lots of reasons to keep things simple in the lab,” said Jennifer Groh, Ph.D., a faculty member of the Duke Institute for Brain Sciences and senior author of the new report. “But it means that we're not very far along in understanding how the brain encodes more than one thing at a time.”

Making sense of complicated sensory information is something of a specialty for Groh. In 2018, her lab was the first to show that single auditory brain cells efficiently transmit information about two different sounds by using something called multiplexing.

“Multiplexing is an idea that comes from engineering,” Groh said. “When you have one wire and a lot of signals, you can swap the signals out, kind of like a telephone party line.”

The telecommunications technology works by rapidly switching back and forth between relaying information from one phone call and another over a single wire. In the brain, the switching is probably happening much more slowly, Groh said, but the general idea is similar.
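
For readers who want to see the engineering idea concretely, here is a minimal sketch of time-division multiplexing in Python. It is an illustration only: the function names `multiplex` and `demultiplex` and the strict one-slot-each alternation are assumptions made for this example, not a description of any real telephone system or of how neurons work.

```python
def multiplex(call_a, call_b):
    """Interleave two equal-length signals onto one shared 'wire'.

    Assumption for this sketch: the wire alternates strictly,
    one time slot for call A, then one time slot for call B.
    """
    if len(call_a) != len(call_b):
        raise ValueError("both calls must have the same number of samples")
    wire = []
    for sample_a, sample_b in zip(call_a, call_b):
        wire.extend([sample_a, sample_b])
    return wire


def demultiplex(wire):
    """Recover the two original signals from the shared wire."""
    # Even-numbered slots carry call A, odd-numbered slots carry call B.
    return wire[0::2], wire[1::2]


call_a = [1, 2, 3]
call_b = [10, 20, 30]
wire = multiplex(call_a, call_b)
recovered_a, recovered_b = demultiplex(wire)
print(wire)
print(recovered_a, recovered_b)
```

Because the receiver knows which slots belong to which call, both signals can be recovered in full even though they shared one channel.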

Na Young Jun, a graduate student in neurobiology at Duke and the lead author of the paper, first learned about how auditory neurons do this telephone wiring trick during a lecture Groh gave as part of a neuroscience boot camp course.

“I thought the concept of multiplexing was fascinating,” Jun said. “I wanted to talk more with Dr. Groh about it, so I went to her office and ended up working in her lab.”

During her time in the Groh lab, Jun was given a valuable dataset from Groh’s collaborator Marlene Cohen, Ph.D., a professor of neurobiology at the University of Chicago and co-author on the paper. Cohen’s group had collected brain activity data from macaques while they watched pictures on a screen in an effort to study attention.

“Collecting data from monkeys is super hard,” Jun said. “It can take seven years of a grad student’s life to collect just a few gigabytes of data.”

The shared dataset proved to be just as efficient as the brain cells Jun then analyzed.

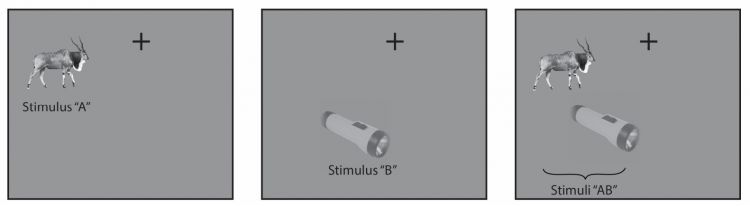

With help from Groh’s collaborator Surya Tokdar, Ph.D., a professor of statistical sciences at Duke and co-author on the paper, Jun found that a subset of cells in the visual cortex, considered a “higher order” brain region in the visual processing pathway, switch between reporting on two different images across trials.

“Say you have a visual cortex neuron,” Jun said. “When it just sees a backpack, it fires 20 times a second. When it just sees a coffee cup, it fires five times a second. But when that same neuron sees the backpack and the coffee cup next to each other, it alternates firing 20 times a second and five times a second.”

However, if two objects overlap, like placing a coffee cup in front of a backpack, the brain cells fire the same way each time the eclipsing objects are presented. This suggests that the neurons treated overlapping images as a single object rather than separate ones.
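
The difference between the two cases can be sketched with a toy simulation in Python. This is not the statistical analysis used in the study. It assumes, purely for illustration, that spike counts in a one-second trial are Poisson distributed, that a switching neuron picks the backpack rate (20 per second) or the coffee cup rate (5 per second) with equal probability on each trial, and that a neuron treating overlapping objects as one entity fires at a single fixed rate, set here to the average of the two. The function names are hypothetical.

```python
import math
import random
import statistics


def poisson(rng, rate):
    """Draw one Poisson-distributed spike count (Knuth's method)."""
    threshold = math.exp(-rate)
    count, product = 0, rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def switching_trial(rng, rate_a=20.0, rate_b=5.0):
    """Side-by-side objects: report one object or the other on each trial."""
    # Assumption: a 50/50 choice between the two single-object rates.
    rate = rate_a if rng.random() < 0.5 else rate_b
    return poisson(rng, rate)


def single_entity_trial(rng, rate_a=20.0, rate_b=5.0):
    """Overlapping objects: the same firing rate on every trial."""
    # Assumption: the fixed rate is the average of the two single-object rates.
    return poisson(rng, (rate_a + rate_b) / 2)


rng = random.Random(0)  # fixed seed so the run is repeatable
switching = [switching_trial(rng) for _ in range(2000)]
single_entity = [single_entity_trial(rng) for _ in range(2000)]

print("switching:     mean", statistics.mean(switching),
      "variance", statistics.variance(switching))
print("single entity: mean", statistics.mean(single_entity),
      "variance", statistics.variance(single_entity))
```

Under these assumptions both neurons have about the same average spike count, but the switching neuron's counts vary far more from trial to trial, because they cluster around two different rates instead of one. A trial-to-trial signature of this kind is what separates switching from simply averaging the two responses.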

While the real world is much busier than just two side-by-side objects, this work starts to move sensory research closer to the conditions of everyday perception.

“Considering how the brain preserves information about two visual stimuli presented is still a far cry from understanding how the myriad details present in a natural scene are encoded,” Jun and her co-authors write in their report. “More studies are still needed to shed light on how our brains operate outside the rarefied environment of the laboratory.”

Support for the research came from the U.S. National Institutes of Health (R00EY020844; R01EY022930; Core Grant P30 EY008098s; R01DC013906; R01DC016363), the McKnight Foundation, the Whitehall Foundation, the Sloan Foundation, and the Simons Foundation.

CITATION: “Coordinated Multiplexing of Information About Separate Objects in Visual Cortex,” Na Young Jun, Douglas A. Ruff, Lily E. Kramer, Brittany Bowes, Surya T. Tokdar, Marlene R. Cohen, Jennifer M. Groh. eLife, Nov. 29, 2022. DOI: 10.7554/eLife.76452.sa0

Online - https://elifesciences.org/articles/76452